Discord Faces Legal Heat: New Jersey Sues Over Alleged Child Safety Failures

New Jersey Attorney General Matthew Platkin, together with the state's Division of Consumer Affairs, has filed a lawsuit against Discord. The suit claims the company misled the public, especially children and parents, about the safety measures on its popular messaging platform, which is widely used by gamers and young users. Filed in the New Jersey Superior Court, the lawsuit accuses Discord of violating state consumer fraud laws by creating a false sense of security.

The legal complaint outlines how Discord allegedly failed to provide transparent information about the risks children face on its platform. According to the lawsuit, the company did not effectively enforce its minimum age restriction and used unclear or complicated safety settings that may have misled users. It further argues that these measures lulled parents and young users into a false belief that the platform was safe, even as children were allegedly being exploited online.

The complaint describes Discord's actions as deceptive and harmful, claiming that the company knowingly allowed its users, particularly minors, to be exposed to inappropriate content. It calls the company's conduct "unconscionable" and "abusive," asserting that Discord's practices directly conflict with public policy. At the heart of these allegations is the claim that Discord prioritized growth over the safety of its users, especially children.

A Discord spokesperson rejected the claims. The company expressed surprise at the legal action, emphasizing its ongoing investments in safety tools to protect users. Discord also said it had been actively engaging with the Attorney General's office, which made the timing and nature of the lawsuit unexpected from the company's perspective.

Central to the lawsuit is Discord’s age verification system, which the plaintiffs argue is easily bypassed by underage users. Children under thirteen can reportedly gain access to the platform by simply entering a false birthdate. This loophole, the lawsuit argues, undermines the company’s claims of maintaining a safe environment for young users and violates consumer protection standards.

The lawsuit also brings into question Discord’s Safe Direct Messaging feature. It alleges that the platform falsely claimed this tool could automatically detect and remove explicit content from private messages. In reality, according to the complaint, messages between users labeled as “friends” were not scanned at all. Even when the filters were enabled, harmful content, including child exploitation material and violent imagery, allegedly continued to reach children.

As part of the legal proceedings, the New Jersey Attorney General is pursuing civil penalties against Discord, though specific financial amounts have not been disclosed. The case is one of several recent lawsuits by state attorneys general targeting social media platforms for allegedly failing to protect young users and for implementing misleading or unsafe features.

This lawsuit adds to a growing trend of legal pressure on major tech firms. In 2023, over 40 attorneys general filed a bipartisan lawsuit against Meta, accusing the company of deploying addictive features on Facebook and Instagram that negatively impacted children’s mental health. These cases suggest increasing scrutiny of how digital platforms manage youth safety.

Discord isn't alone in facing such allegations. In September 2024, the New Mexico Attorney General sued Snap, claiming Snapchat's design made it easy for predators to carry out sextortion schemes. The following month, a group of state attorneys general sued TikTok, alleging that it misled users about its safety features and profited from features harmful to children, such as virtual currency and livestreaming.

In early 2024, top executives from Discord, Meta, Snap, TikTok, and X were called to testify at a Senate hearing, where lawmakers criticized the companies for their apparent failure to safeguard minors. The hearing underscored growing bipartisan concern over the role of social media in exposing young users to danger, and it marked a turning point in the legal and regulatory landscape for tech companies operating in youth spaces.

What's Your Reaction?

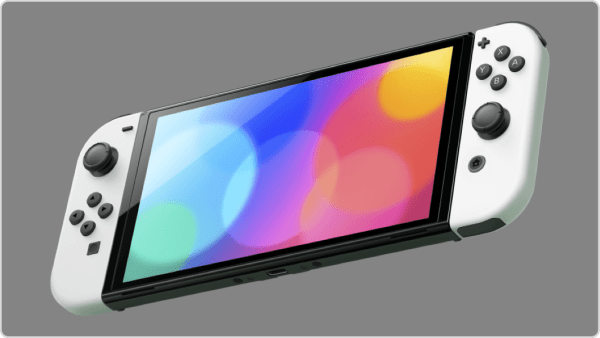

:format(webp)/cdn.vox-cdn.com/uploads/chorus_image/image/70136881/1347078605.0.jpg)